AI Agent-Based Knowledge Network

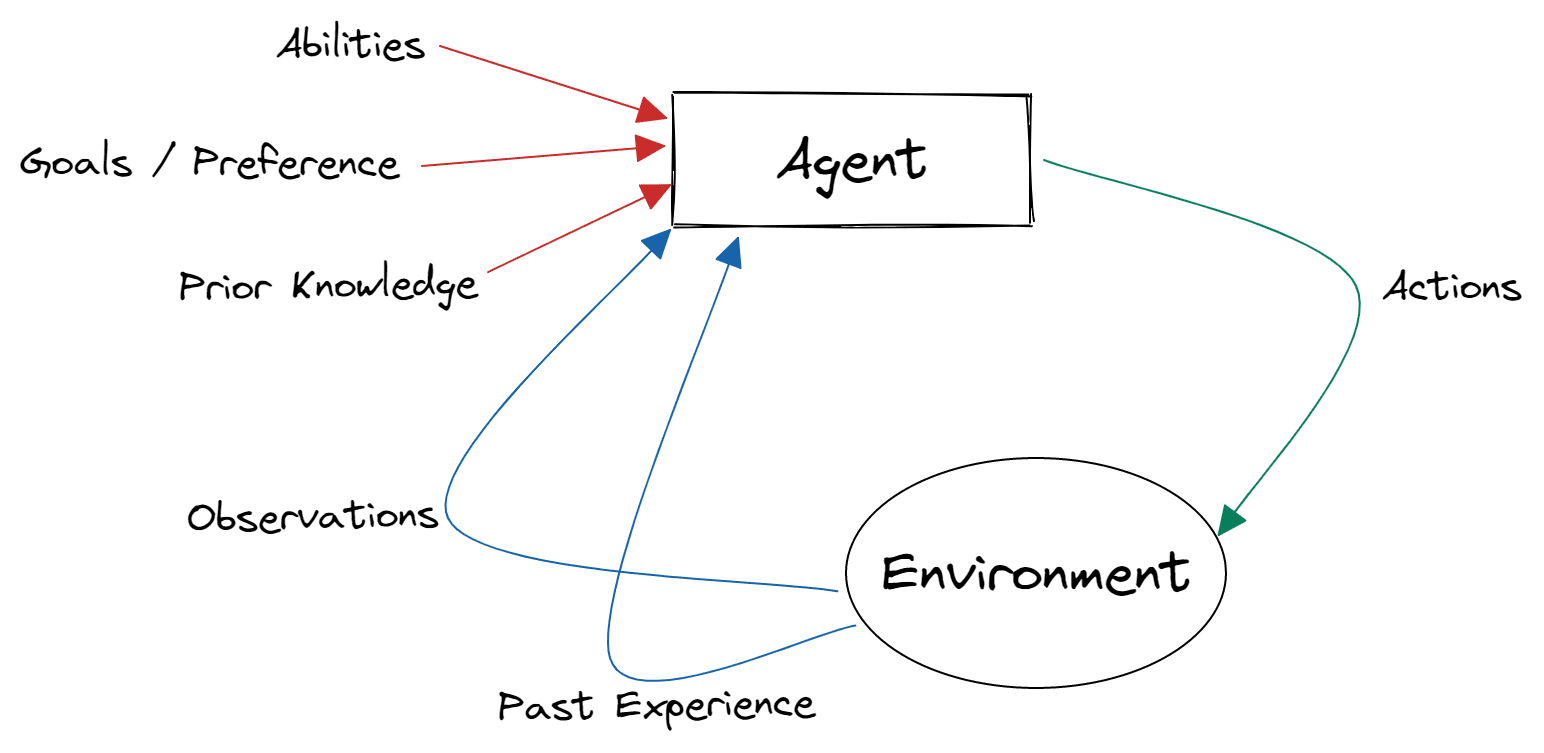

The technology absorbs information, filters out the noise, and processes what remains into knowledge that task-specific AI models can use.

The knowledge network system monitors niche events and information, and uses AI to:

- Dynamically process and filter the information that is relevant and useful to readers

- Produce comprehensive summaries and reports for easy review

- Combine information for building AI models with cross-disciplinary features

- Communicate interactively with users about findings and specific questions using Generative AI technology
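The filtering step in the list above can be sketched as follows. This is a minimal illustration only, assuming a simple keyword-overlap score; the `Item` structure, the `relevance` function, the topic set, and the threshold are all hypothetical and are not the scoring the actual system uses.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """A single incoming piece of information (hypothetical structure)."""
    source: str
    text: str


def relevance(item: Item, topics: set[str]) -> float:
    """Fraction of the watched topic terms that appear in the item's text."""
    words = {w.strip(".,;:!?()\"'").lower() for w in item.text.split()}
    return len(words & topics) / len(topics) if topics else 0.0


def filter_items(items: list[Item], topics: set[str], threshold: float = 0.5) -> list[Item]:
    """Keep items whose score meets the threshold, most relevant first."""
    scored = [(relevance(it, topics), it) for it in items]
    kept = [(score, it) for score, it in scored if score >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [it for _, it in kept]


if __name__ == "__main__":
    # Made-up feed and topics, for illustration only.
    topics = {"collagen", "supplement", "trial"}
    feed = [
        Item("newswire", "New trial tests collagen supplement in adults."),
        Item("blog", "Ten travel tips for the summer."),
        Item("journal", "Collagen trial results published."),
    ]
    for item in filter_items(feed, topics):
        print(item.source, "->", item.text)
```

A production filter would more likely score items with a trained model or embeddings; the keyword overlap here only shows where the filtering decision sits in the flow.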

Application: Auto Investment Research Assistant Agent

Investment management companies use the technology to monitor their investments from anywhere at any time. We also use it to provide soft news content for e-commerce providers, helping them generate more traffic, build customer loyalty, and gauge future demand for certain products.

Application: Auto Medical Research Assistant Agent

In the example on the right, a cosmetic ad's claim about the health benefits of collagen is interpreted and translated by machine, then verified against several medical journal sources. The machine first parses the ad's complex sentence structure, extracts the key message, and then validates that message against the fuzzy medical expert system.

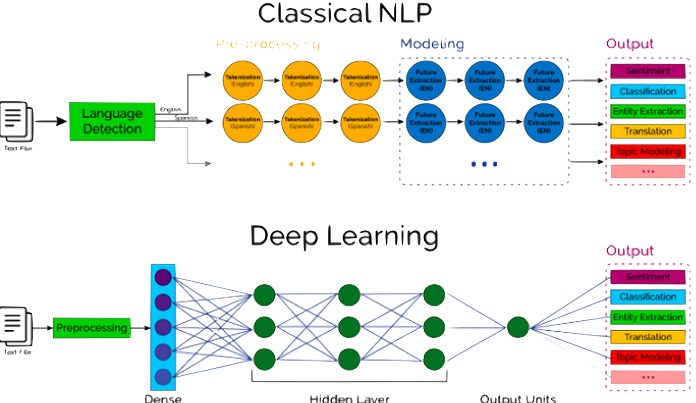

Deep Learning-Based Stock Picking Technology

A deep learning technology that constructs an optimal portfolio from market data and other kinds of data

The deep learning technology combines modern portfolio theory with factors such as market fluctuations, economic indicators, company fundamentals, newswire stories, and social media data. The model continuously learns from new data and evolves to improve its accuracy and risk-adjusted return. We use various self-developed and open-source LLMs to identify investment risk in real time.
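To make the portfolio-theory ingredient concrete, here is a minimal sketch of the classic two-asset minimum-variance weighting. It illustrates only the textbook calculation, not the deep learning model described above; the function names and the return series are hypothetical.

```python
from statistics import mean


def covariance(xs: list[float], ys: list[float]) -> float:
    """Sample covariance of two equally long return series."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def min_variance_weights(a: list[float], b: list[float]) -> tuple[float, float]:
    """Weights (w_a, w_b) summing to 1 that minimise portfolio variance.

    Closed form for two assets; short positions are allowed.
    """
    var_a, var_b, cov_ab = covariance(a, a), covariance(b, b), covariance(a, b)
    denom = var_a + var_b - 2 * cov_ab
    if denom == 0:
        # The two series differ only by a constant, so every split
        # has the same variance; fall back to an even split.
        return 0.5, 0.5
    w_a = (var_b - cov_ab) / denom
    return w_a, 1 - w_a


def portfolio_variance(a: list[float], b: list[float], w_a: float) -> float:
    """Variance of a portfolio holding w_a in asset a and the rest in asset b."""
    w_b = 1 - w_a
    return (
        w_a ** 2 * covariance(a, a)
        + w_b ** 2 * covariance(b, b)
        + 2 * w_a * w_b * covariance(a, b)
    )


if __name__ == "__main__":
    # Made-up periodic returns for two assets.
    returns_a = [0.01, -0.02, 0.03, 0.00]
    returns_b = [0.00, 0.01, -0.01, 0.02]
    w_a, w_b = min_variance_weights(returns_a, returns_b)
    print("weights:", w_a, w_b)
    print("variance:", portfolio_variance(returns_a, returns_b, w_a))
```

A learned model would replace the fixed historical covariance with estimates that react to the news, fundamentals, and economic signals listed above.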

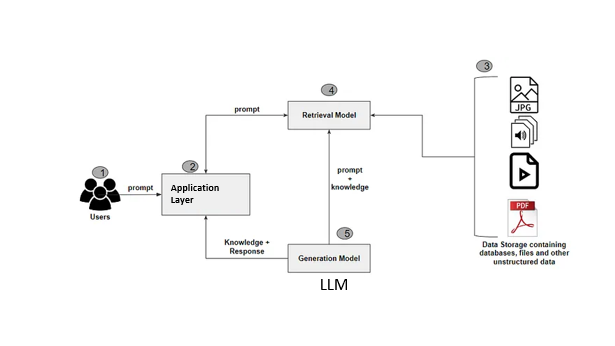

Retrieval-Augmented Generation (RAG)

The RAG framework lets an AI model draw on a given set of data, so its answers are not limited by the knowledge cutoff date of the Large Language Model

Pairing the retrieval-augmented generation (RAG) framework with a Large Language Model (LLM) is a practical way to build a solution tailored to your specific use case. RAG retrieves relevant passages from your own data at query time and supplies them to the generative model as context, so responses reflect the nuances and requirements of your application without retraining the model's parameters. This hybrid framework adapts to diverse datasets and contexts: it extracts relevant information from large corpora and generates coherent, contextually appropriate responses. Whether in customer service, content creation, or knowledge extraction, combining RAG with an LLM offers the flexibility to address your particular needs and challenges.
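The retrieve-then-generate flow can be sketched in a few lines. This is a simplified illustration, assuming bag-of-words cosine similarity in place of a real embedding model and stopping at the prompt that would be sent to an LLM; all function names and sample passages are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble the text that would be passed to the LLM (the call itself is omitted)."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


if __name__ == "__main__":
    # Made-up knowledge base, for illustration only.
    passages = [
        "The fund's redemption notice period is 30 days.",
        "Office hours are 9am to 5pm.",
        "Management fee is 1.5 percent per year.",
    ]
    question = "What is the redemption notice period?"
    print(build_prompt(question, retrieve(question, passages, k=1)))
```

Because the knowledge lives in the retrieved passages rather than in the model weights, updating the document set changes the answers without any retraining.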

Application: Q&A Chatbot with a Finite Set of Knowledge

A RAG-based chatbot streamlines due diligence by sifting through large repositories of documents and extracting the relevant information. It answers queries by combining information retrieval with generative capabilities, so responses are grounded in the source documents and tailored to the user's needs.

Application: Operational Manual Review

A RAG-based application simplifies operational procedures by parsing extensive manuals, extracting the vital information, and answering user inquiries directly. It combines retrieval techniques with generative capabilities so that users receive accurate guidance tailored to the operational task at hand.
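Before a long manual can be searched, it is usually split into smaller passages. The following is a minimal sketch of one common approach, fixed-size word chunks with overlap; the `chunk_words` helper and its default sizes are assumptions for illustration, not the parsing method used in the product.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of up to `size` words.

    Each chunk repeats the last `overlap` words of the previous one so that
    an instruction spanning a chunk boundary is not cut in half.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    # Made-up manual excerpt, for illustration only.
    manual = (
        "Step 1: switch off the main power. Step 2: open the service panel. "
        "Step 3: replace the filter cartridge. Step 4: close the panel and restore power."
    )
    for i, chunk in enumerate(chunk_words(manual, size=12, overlap=3)):
        print(i, chunk)
```

Each chunk can then be indexed and retrieved individually, so a question about one procedure pulls in only the relevant part of the manual.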